At the recent Consumer Electronics Show (CES) 2024, Nvidia unveiled significant advancements in its generative AI-powered Non-Playable Characters (NPCs), a development that could revolutionize the gaming industry.

Built on Nvidia’s Avatar Cloud Engine (ACE), these NPCs combine several technologies: speech recognition (speech-to-text), text-to-speech voice output, generative AI facial animation, and automated character personas. Together, these components aim to make gaming experiences more dynamic and responsive.

Nvidia’s presentation, led by Seth Schneider, senior product manager for ACE, walked through how the technology works. The pipeline starts by transcribing the player’s speech into text. A cloud-based large language model then processes that text and generates the NPC’s reply, which is voiced as spoken audio. Omniverse Audio2Face uses that generated audio to animate the character’s face with accurate lip-syncing, and the result is rendered seamlessly in-game.
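The four-stage loop described above can be pictured as a simple chain of functions. The sketch below is a minimal illustration under stated assumptions, not Nvidia’s implementation or API: every stage is a hypothetical stub, and the names `run_npc_turn`, `NPCTurn`, `fake_stt`, `fake_llm`, `fake_tts`, and `fake_animate` are invented here. A real integration would call actual speech, language-model, and Audio2Face services in place of the toy stand-ins.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical stage signatures; these are NOT Nvidia ACE or Audio2Face APIs.
SpeechToText = Callable[[bytes], str]        # player audio -> transcript
LanguageModel = Callable[[str, str], str]    # (persona, transcript) -> reply text
TextToSpeech = Callable[[str], bytes]        # reply text -> reply audio
FaceAnimator = Callable[[bytes], List[str]]  # reply audio -> facial animation frames


@dataclass
class NPCTurn:
    player_text: str
    reply_text: str
    reply_audio: bytes
    face_frames: List[str]


def run_npc_turn(
    player_audio: bytes,
    persona: str,
    stt: SpeechToText,
    llm: LanguageModel,
    tts: TextToSpeech,
    animate: FaceAnimator,
) -> NPCTurn:
    """Run one conversational turn through the four stages in order."""
    player_text = stt(player_audio)          # 1. speech-to-text
    reply_text = llm(persona, player_text)   # 2. language model writes the in-character reply
    reply_audio = tts(reply_text)            # 3. text-to-speech voices the reply
    face_frames = animate(reply_audio)       # 4. audio drives lip-synced facial animation
    return NPCTurn(player_text, reply_text, reply_audio, face_frames)


# Toy stand-ins (hypothetical) so the sketch runs end to end.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the "audio" is already UTF-8 text


def fake_llm(persona: str, text: str) -> str:
    return f"[{persona}] You asked: {text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


def fake_animate(audio: bytes) -> List[str]:
    # One placeholder frame per whitespace-separated chunk of the fake audio.
    return [f"frame_{i}" for i in range(len(audio.split()))]


turn = run_npc_turn(b"Bring out the good stuff", "Jin",
                    fake_stt, fake_llm, fake_tts, fake_animate)
print(turn.reply_text)  # [Jin] You asked: Bring out the good stuff
```

Keeping each stage behind a plain function signature means a cloud model could be swapped for a local one, or a placeholder animator for a real one, without changing the turn logic itself.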

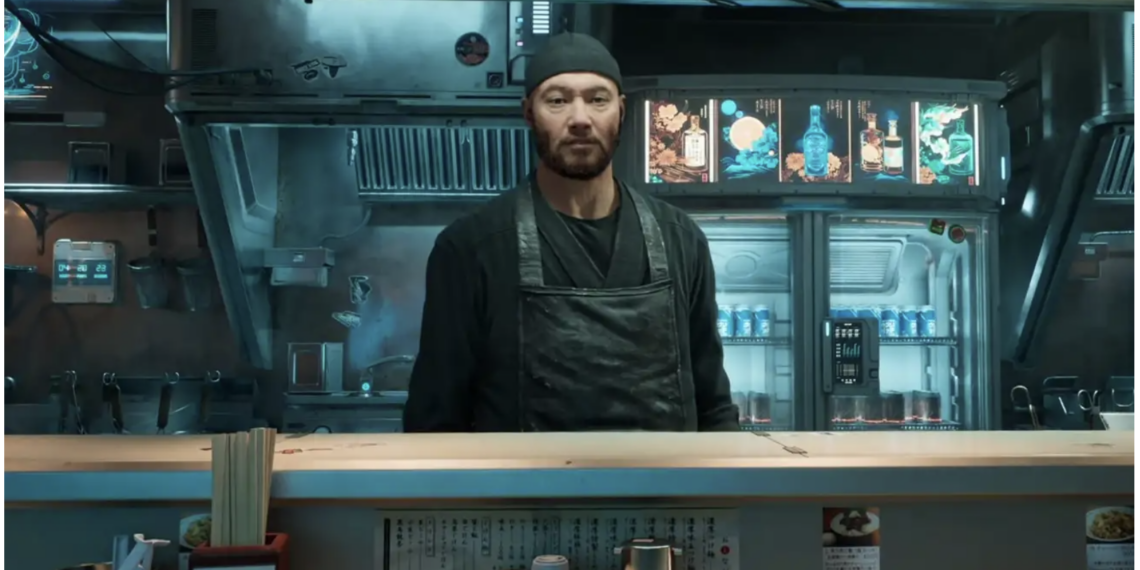

This demonstration builds on the technology showcased at Computex 2023. That earlier demo featured a basic interaction with Jin, the owner of a futuristic ramen shop. The CES 2024 demo expanded the concept, showing Jin and a second NPC, Nova, holding AI-generated conversations that can vary with each playthrough. This suggests a future in which every gaming session could offer unique interactions with NPCs.

Beyond Conversation: Environment-Aware AI NPCs

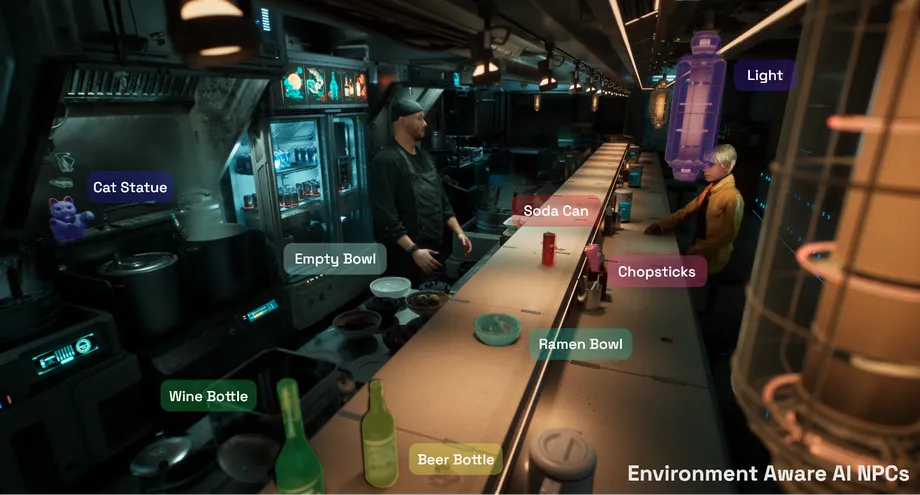

Another advance came from Convai, a company working with Nvidia, whose technology enables AI-powered NPCs to interact with objects in their environments.

For instance, in the demo, Jin is shown pulling out a bottle when instructed to “bring out the good stuff.” This level of interaction suggests NPCs could engage with various scene elements like bowls, bottles, lights, and other props, adding depth to the gaming environment.

Nvidia has revealed that several prominent game developers are already using its ACE production services, including Genshin Impact publisher miHoYo, NetEase Games, Tencent, and Ubisoft. These collaborations indicate strong industry interest in bringing AI-powered NPCs to future games.

Potential and Challenges Ahead

While the technology is promising, it is still unclear which specific games will incorporate these AI-generated NPCs. Nvidia and Convai say the technology will integrate seamlessly with popular game engines such as Unreal Engine and Unity.

However, there is some skepticism about the quality of these interactions. Although Jin and Nova held nearly convincing conversations in the demos, they still sounded somewhat robotic and uncanny.

This emerging technology raises intriguing questions about the future of gaming. It suggests a shift towards more AI-driven interactions, potentially replacing traditional NPC scripting. As this technology evolves, it may challenge our perceptions of in-game characters, blurring the line between AI-generated and human-crafted interactions.

“Explore the Imaginative World of Toy Trains in VR”: Dive into a world where childhood memories come to life. Discover our in-depth review of Toy Trains, a VR game that masterfully combines puzzle-solving with the joy of model train building.